You Think Global SQL Is Just Cross‑Region Writes… It Is Not

Global SQL is not broadcasting writes. It is about time control and coordination… and most engineers fail because they copy the API instead of the architecture.

TLDR

Global SQL must coordinate time, consensus and commits, not hope for eventual alignment

Spanner uses TrueTime (a global time API), Paxos consensus and two-phase commit to guarantee order

Multi-version concurrency control gives lock-free snapshot reads across regions

Synchronous replication and quorum reads and writes keep data durable but add controlled latency

Placement rules, declarative intent and staged rollout contain blast radius. A bad policy can still roll out globally if these guardrails are ignored.

How it works

S1 Intent service

What: Captures a client’s write intent (what row, constraints, scope). This is the entry point in the Frontend that parses SQL and assigns key ranges.

Why: Without explicit intent, writes scatter unpredictably. Intent ensures the system knows all participants for the commit.

How:

Resolves the tablet (key-range shard) and Paxos group for key `42` (hypothetical example) via the directory metadata.

Packages the mutation and scope for the coordinator.

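Complexity: Lookup is O(log n) using the metadata index. A naïve broadcast contacts every shard, which is O(n) messages per write and grows to O(n²) if shards also have to acknowledge each other.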
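The lookup step can be sketched as a binary search over sorted key-range boundaries. This is a minimal illustration, not Spanner's actual directory code: the `Tablet` class, the `DIRECTORY` contents, the group names and the shape of the intent dictionary are all assumptions made for the example.

```python
from bisect import bisect_right
from dataclasses import dataclass


@dataclass(frozen=True)
class Tablet:
    start_key: int    # inclusive lower bound of the key range
    paxos_group: str  # hypothetical Paxos group identifier


# Hypothetical directory metadata, kept sorted by start_key.
DIRECTORY = [
    Tablet(0, "paxos-a"),
    Tablet(100, "paxos-b"),
    Tablet(200, "paxos-c"),
]
START_KEYS = [t.start_key for t in DIRECTORY]


def resolve_tablet(key: int) -> Tablet:
    """O(log n) lookup: the owner is the last tablet whose start_key <= key."""
    idx = bisect_right(START_KEYS, key) - 1
    if idx < 0:
        raise KeyError(f"no tablet owns key {key}")
    return DIRECTORY[idx]


def build_intent(table: str, key: int, values: dict) -> dict:
    """Package the mutation and its scope for the coordinator."""
    tablet = resolve_tablet(key)
    return {
        "table": table,
        "key": key,
        "values": values,
        "participants": [tablet.paxos_group],
    }


intent = build_intent("Orders", 42, {"status": "paid"})
print(intent["participants"])
```

Because the intent names its participants up front, the coordinator never has to ask every shard whether it owns the key.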

Example:

`INSERT INTO Orders VALUES (42, 'paid')` finds the tablet owning key `42` and sends the intent.

Next: Call the TrueTime service to get time bounds.

Incident Takeaway 2024–2025: Corrupt metadata cache once routed writes incorrectly. Fix: TTL on metadata and auto refresh.

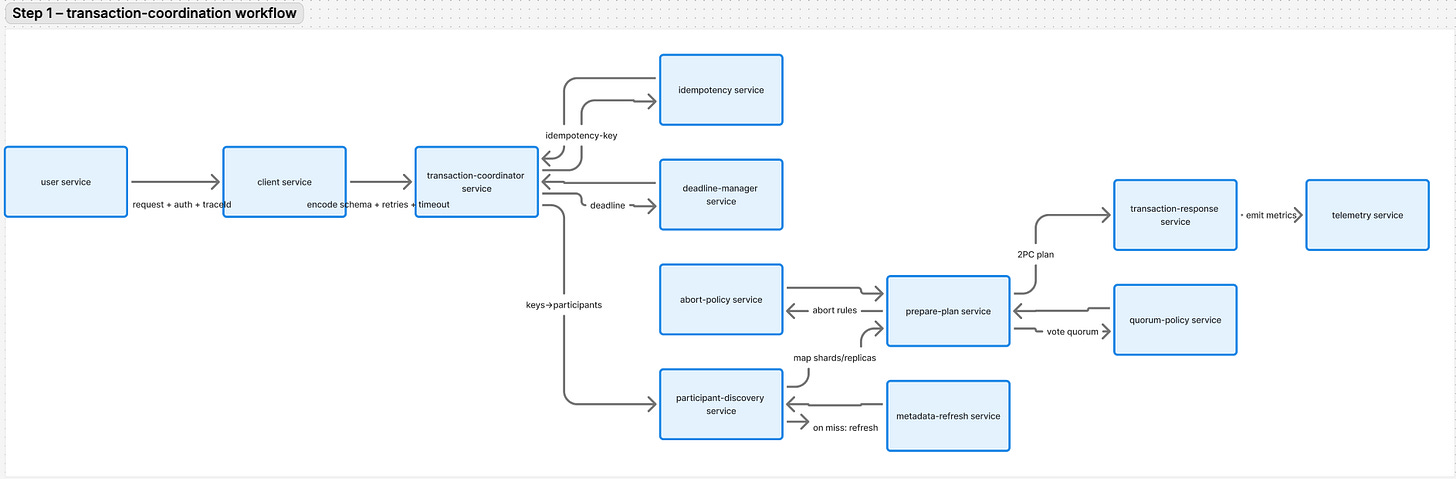

A. user service handles the incoming request, validates auth, attaches trace context and captures metadata.

B. client service wraps that intent into a structured RPC call with schema, retries and timeout budgets.

C. transaction-coordinator breaks the request into smaller operations and assigns them to participants.

D. participant-discovery locates which shards or replicas own the data for those operations.

E. prepare-plan constructs a 2-phase commit plan that defines voting and logging.

F. transaction-response collects participant acknowledgments and returns a single verdict to the client.
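Steps C through F can be sketched in a few lines. This is a simplified illustration under stated assumptions: `discover_participant`, the shard names and the `prepare`/`finish` callbacks are hypothetical, and a real coordinator would durably log both the prepare votes and the final decision before acknowledging anything.

```python
from collections import defaultdict


def discover_participant(key: int) -> str:
    """Hypothetical stand-in for participant-discovery (step D)."""
    return "shard-a" if key < 100 else "shard-b"


def plan_operations(writes: dict) -> dict:
    """Steps C and D: split the request and group operations by owning shard."""
    plan = defaultdict(dict)
    for key, value in writes.items():
        plan[discover_participant(key)][key] = value
    return dict(plan)


def two_phase_commit(plan: dict, prepare, finish) -> str:
    """Steps E and F: collect every vote, then send one verdict to all."""
    # Phase 1: each participant votes True (prepared) or False (cannot commit).
    votes = {shard: prepare(shard, ops) for shard, ops in plan.items()}
    verdict = "commit" if all(votes.values()) else "abort"
    # Phase 2: every participant receives the same decision.
    for shard in plan:
        finish(shard, verdict)
    return verdict


log = []
verdict = two_phase_commit(
    plan_operations({42: "paid", 150: "shipped"}),
    prepare=lambda shard, ops: True,
    finish=lambda shard, v: log.append((shard, v)),
)
print(verdict, log)
```

A single `False` vote from any participant flips the verdict to abort for everyone, which is what prevents partial commits.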

S2 Time service (TrueTime)

What: Provides a `[earliest, latest]` time interval; the uncertainty ε defines the commit wait.

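Note - ε is the clock’s uncertainty bound, half the width of the interval. If your clock may be off by 3 milliseconds in either direction, the interval is 6 milliseconds wide and Spanner waits about 6, so the commit timestamp is already in the past on every replica before the commit becomes visible.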

Why: Coordinated timestamps ensure external consistency. No ordering gaps means no ghost reads.

How:

Each node calls `TT.now()` and receives an interval.

Waits out the uncertainty (roughly 2ε, the full interval width) before commit to ensure the commit timestamp has passed in every region.

Complexity: Local call O(1). Global call O(k) for k regions. Naïve NTP sync would degrade to O(n²).
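The commit wait can be sketched with a simulated clock. This is an assumption-heavy illustration, not the real TrueTime API: `tt_now`, `TTInterval` and the fixed `EPSILON_S` are hypothetical, and a local monotonic clock stands in for the GPS and atomic-clock-backed time source.

```python
import time
from dataclasses import dataclass

EPSILON_S = 0.003  # assumed uncertainty bound of 3 ms


@dataclass(frozen=True)
class TTInterval:
    earliest: float
    latest: float


def tt_now(clock=time.monotonic) -> TTInterval:
    """Simulated TT.now(): true time is assumed to lie in [t - eps, t + eps]."""
    t = clock()
    return TTInterval(t - EPSILON_S, t + EPSILON_S)


def commit_wait(clock=time.monotonic, sleep=time.sleep):
    """Pick a commit timestamp, then block until it is definitely in the past."""
    start = clock()
    # No correct clock anywhere can currently read later than this value.
    commit_ts = tt_now(clock).latest
    # Wait until even the earliest possible "now" is past the commit timestamp.
    while tt_now(clock).earliest <= commit_ts:
        sleep(0.0005)
    return commit_ts, clock() - start


commit_ts, waited = commit_wait()
print(f"waited {waited * 1000:.1f} ms")
```

The loop exits only after about 2ε has elapsed, so a wider ε directly lengthens every commit. That is why clock quality shows up as write latency.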

Example: If the interval is `[earliest=10:00:00.001, latest=10:00:00.007]`, the commit waits ~6 ms to be safe.

Next: Coordinator picks up the transaction and begins prepare.

Incident Takeaway 2024–2025: A TrueTime daemon drift once widened ε; cross checking clocks kept commits safe.

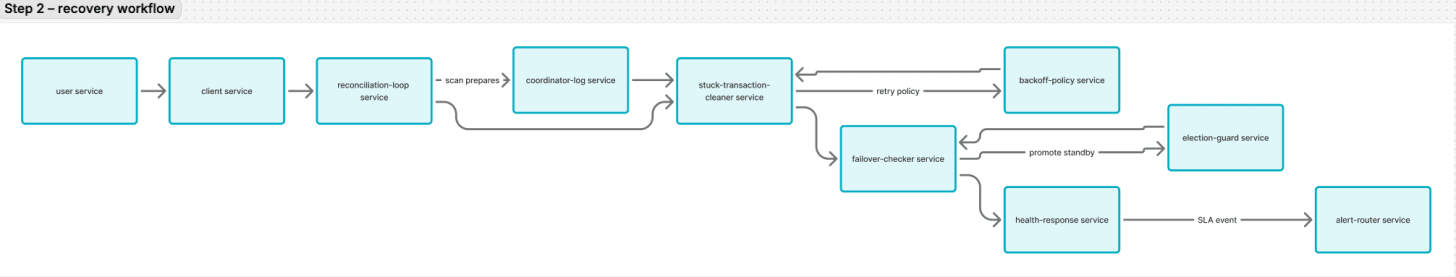

A. reconciliation-loop scans for transactions that never finished because of timeouts or crashes.

B. stuck-transaction-cleaner replays logs, retries failed steps or aborts based on quorum rules.

C. failover-checker promotes a standby region when the primary stops responding.

D. health-response reports the cluster’s health metrics for visibility.
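Steps A and B can be sketched as a scan followed by a per-transaction decision. The source does not spell out the quorum rules, so the rule used here (finish if every participant prepared, retry if a majority did, abort otherwise) is purely an assumption for illustration, as are the `Txn` fields and the `TIMEOUT_S` threshold.

```python
from dataclasses import dataclass

TIMEOUT_S = 30.0  # assumed age after which a prepared transaction counts as stuck


@dataclass
class Txn:
    txn_id: str
    state: str         # "prepared", "committed" or "aborted"
    started_at: float  # seconds, same clock as `now` below
    prepare_acks: int  # participants that logged "prepared"
    participants: int


def find_stuck(txns, now: float):
    """Step A: transactions that prepared but never reached a verdict."""
    return [t for t in txns
            if t.state == "prepared" and now - t.started_at > TIMEOUT_S]


def decide(txn: Txn) -> str:
    """Step B, using an assumed rule (not taken from the source)."""
    if txn.prepare_acks == txn.participants:
        return "commit"  # everyone voted yes, so finish the commit
    if txn.prepare_acks * 2 > txn.participants:
        return "retry"   # majority prepared, so re-send prepare to the rest
    return "abort"


def reconcile(txns, now: float) -> dict:
    return {t.txn_id: decide(t) for t in find_stuck(txns, now)}


txns = [
    Txn("t1", "prepared", 0.0, 3, 3),
    Txn("t2", "prepared", 0.0, 2, 3),
    Txn("t3", "prepared", 0.0, 1, 3),
    Txn("t4", "prepared", 90.0, 0, 3),  # too recent to count as stuck
]
print(reconcile(txns, now=100.0))
```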

S3 Transaction service (Coordinator)

What: Orchestrates two-phase commit across Paxos groups (participants) and tracks the global transaction.

Why: Without coordination, partial commits create divergence.

How: